20 Reliability of Dyslexia Prediction and Subtyping

Many dyslexia screening initiatives require the use of specific measures such as Rapid Automatized Naming (RAN; see Section 10.2.1) and Rapid Visual Processing (RVP; see Section 10.2.2). The following sections report the reliability of ROAR measures that were specifically designed for dyslexia prediction and subtyping (see Section 10.2 for more information and a theoretical background on the measures).

20.1 Reliability of ROAR Rapid Automatized Naming (ROAR-RAN)

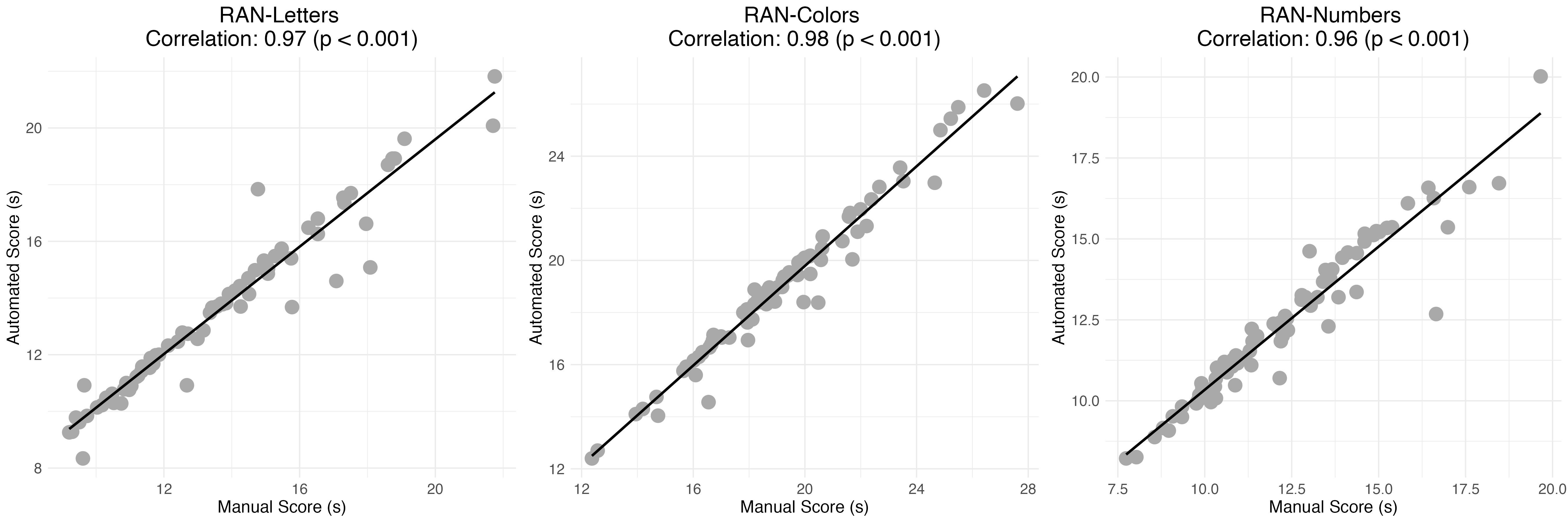

Reliability and evidence of construct validity were assessed based on correlations among scores across RAN-Letters, RAN-Colors, and RAN-Numbers. The assessment was conducted in two stages: first, measuring the reliability of the automated scoring system relative to the manual scoring method, and second, analyzing the correlations among the three RAN measures using the automated scores.

20.1.1 Automated Scoring Reliability

We assessed the reliability of our automated scoring system by comparing it to manual scoring in a sample of 100 participants. In the manual process, the duration of each task was measured by manually timing from the start of the first spoken word to the end of the last spoken word. In the automated process, the duration was determined using start and end timestamps generated by our automatic speech recognition model.

The correlation between manual and automated scoring was calculated for each RAN task, with each task achieving a Pearson correlation coefficient greater than 0.95 (see Figure 20.1). These strong correlations suggest that the automated scoring system reliably produces scores that are nearly identical to manual scoring.
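The comparison described above can be sketched in a few lines of Python. This is a minimal illustration, not the ROAR scoring pipeline: the function names, the timestamp format, and all data values are hypothetical and chosen only to show how an automated duration is derived from start and end timestamps and then correlated with manual timings.

```python
import math


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences of numbers."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def duration_from_timestamps(start_s, end_s):
    """Task duration in seconds from speech onset and offset timestamps."""
    return end_s - start_s


# Hypothetical data: manual stopwatch durations (seconds) for five participants
manual = [21.4, 35.0, 28.7, 42.1, 30.3]

# Hypothetical (start, end) timestamps for the same participants, standing in
# for the output of an automatic speech recognition model
asr_timestamps = [(0.8, 22.0), (1.1, 36.4), (0.5, 29.0), (0.9, 43.3), (1.2, 31.2)]
automated = [duration_from_timestamps(s, e) for s, e in asr_timestamps]

r = pearson_r(manual, automated)
print(f"manual vs. automated: r = {r:.3f}")
```

In practice the same computation would be repeated once per RAN task (Letters, Colors, Numbers).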

20.1.2 Correlation Among ROAR-RAN Measures

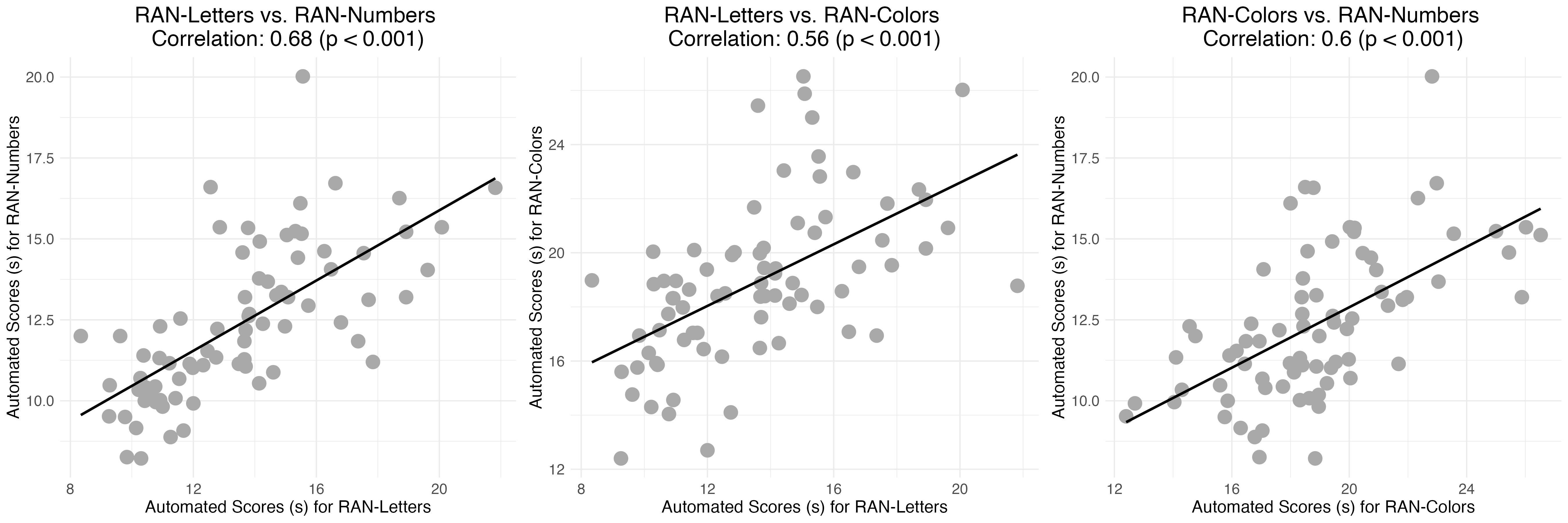

Next, we examined the correlation between the three RAN measures—RAN-Letters, RAN-Colors, and RAN-Numbers—using the automated scores. This analysis aimed to confirm the construct validity of the ROAR-RAN tasks by evaluating the relationships among these measures. All three measures are designed to tap into the same latent construct, though color naming is not as automatized as letter naming and, thus, we expect a lower correlation for RAN-Colors.

We calculated Pearson correlations between the automated scores for all participants across the following pairs of tasks: RAN-Letters and RAN-Numbers, RAN-Letters and RAN-Colors, and RAN-Colors and RAN-Numbers. Figure 20.2 shows these correlations, which provide evidence that each measure is reliably tapping into a similar latent construct.

20.2 Reliability of ROAR Rapid Visual Processing (ROAR-RVP)

20.2.1 Background: Published studies

The rapid visual processing paradigm that was administered to older children between 7 and 17 years (Ramamurthy, White, and Yeatman 2024) was translated for a younger population, and a computer adaptive testing algorithm was implemented (see 4) in order to improve reliability and ensure the task spans a broad age range. We translated the task for a younger population by iteratively changing the task and the design while administering it to small groups of kindergarten and first graders within the Multitudes study sample population. This work was done through collaboration between the ROAR team at Stanford University and the Multitudes Team at UCSF. The final version is tailored to kindergarten and first grade children but spans through adulthood. Details of the design and validation process are published in Ramamurthy et al. (2024).

20.2.2 Data-informed design changes to achieve high reliability in young children

For the RVP measure, item difficulty depends on a variety of task parameters. Two task parameters in particular have the potential to influence performance: 1) encoding time and 2) string length.

20.2.2.1 Study 1 (N = 56)

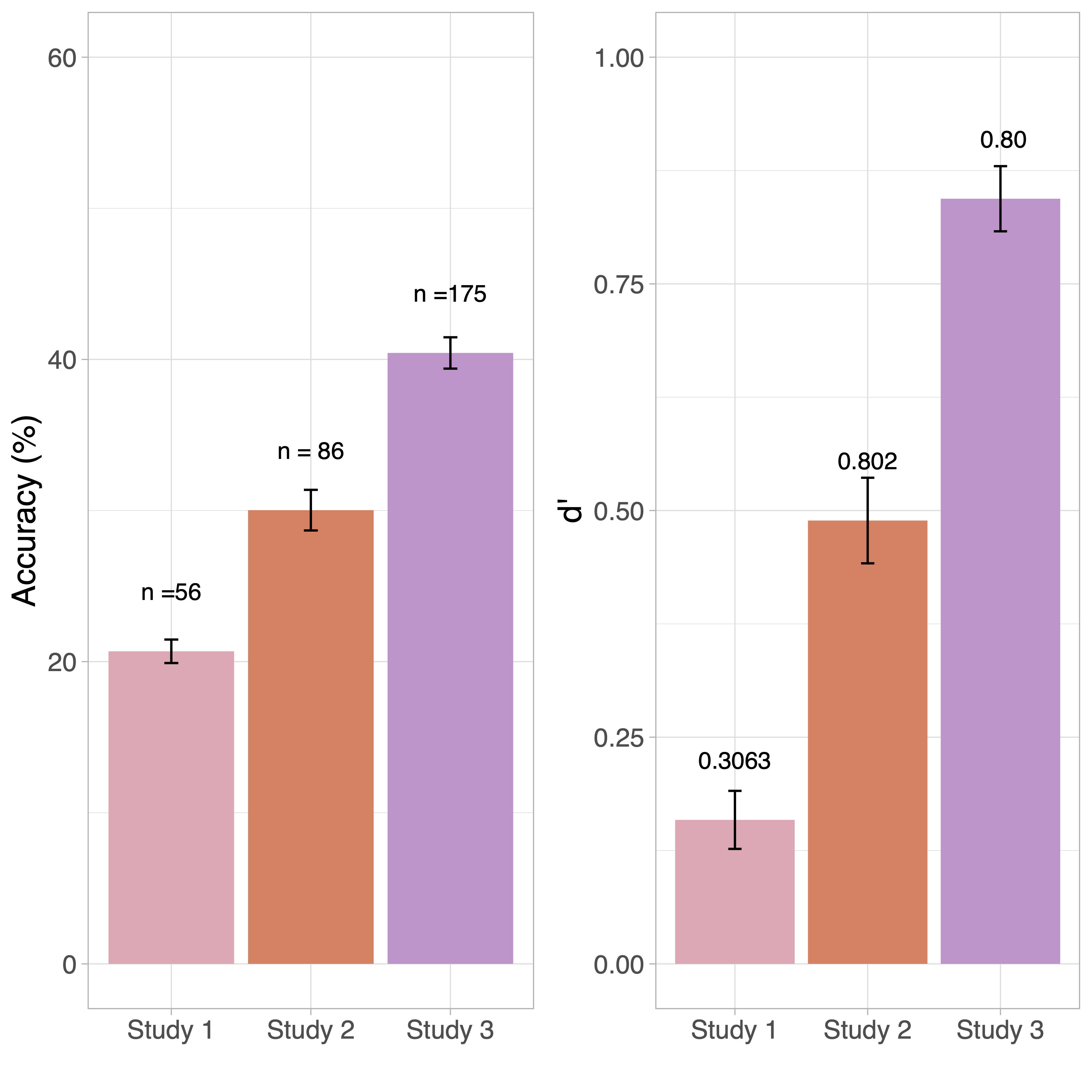

We first administered the task with parameters similar to those used in our previous study of older children and adults (Ramamurthy, White, and Yeatman 2024): an encoding time of 120 ms and a string length of 6 letters. K/1 children’s task performance at an encoding time of 120 ms was significantly lower (16.813% +/- 7.654), with very low reliability (Spearman-Brown corrected split-half reliability: 0.075), than that of the older cross-sectional population reported in our previous work (37.148% +/- 1.191; reliability: 0.8). Performance increased with encoding times of 240 ms (21.125% +/- 8.371) and 480 ms (24.107% +/- 9.851; d’: 0.269). Notably, even at 480 ms many participants still performed at chance and reliability remained low (Spearman-Brown corrected split-half reliability: 0.306).

20.2.2.2 Study 2 (N = 86)

We tested how performance changes in trials with four elements (2 on either side of fixation) and six elements (3 on either side of fixation) with encoding times of 240 ms and 480 ms. Overall task performance improved in Study 2 (mean accuracy: 30.025% +/- 1.344) compared to Study 1 (mean accuracy: 20.685% +/- 0.777; mean d’: 0.159 +/- 0.032).

20.2.2.3 Study 3 (N = 175)

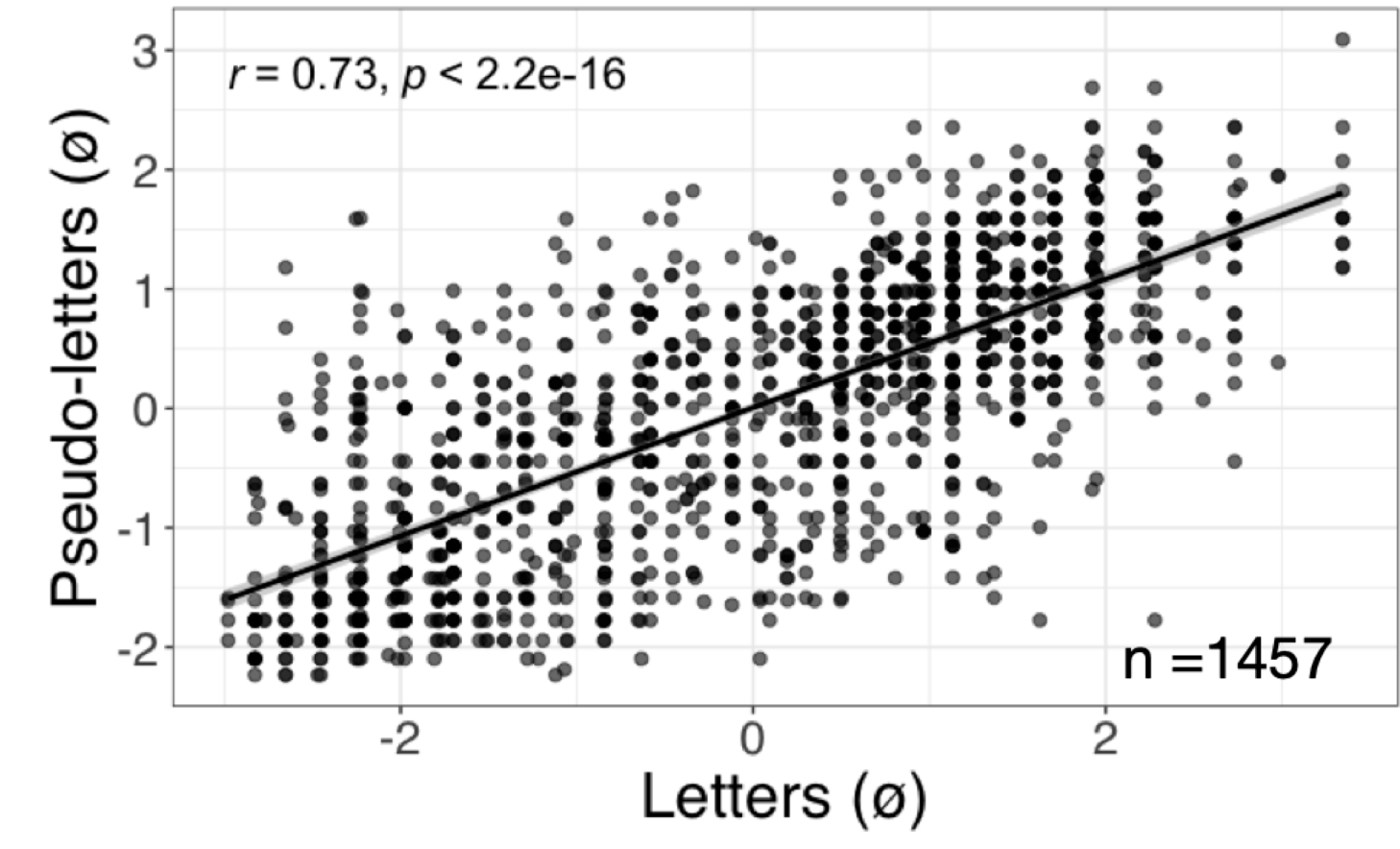

In the next iteration, we added 2-element strings in addition to 4- and 6-element strings. We further reduced redundancy by removing the 480 ms encoding time, which did not increase accuracy. An encoding period of 240 ms ensures that encoding occurs without a saccadic eye movement (Li, Hanning, and Carrasco 2021). Overall task performance increased significantly compared to Studies 1 and 2. Performance in Study 3 (40.429% +/- 1.0368) is comparable to the overall performance reported in a previous cross-sectional study (n = 185) (Ramamurthy, White, and Yeatman 2024), in which overall performance for 6- to 17-year-olds in the MEP task with an encoding time of 120 ms and a string length of 6 elements was 37.148% +/- 1.191. Overall task reliability was comparable between Study 3 (r = 0.8) and Study 2 (r = 0.802); see Figure 20.3.

20.2.2.4 IRT to model item difficulty levels and to optimize task parameters

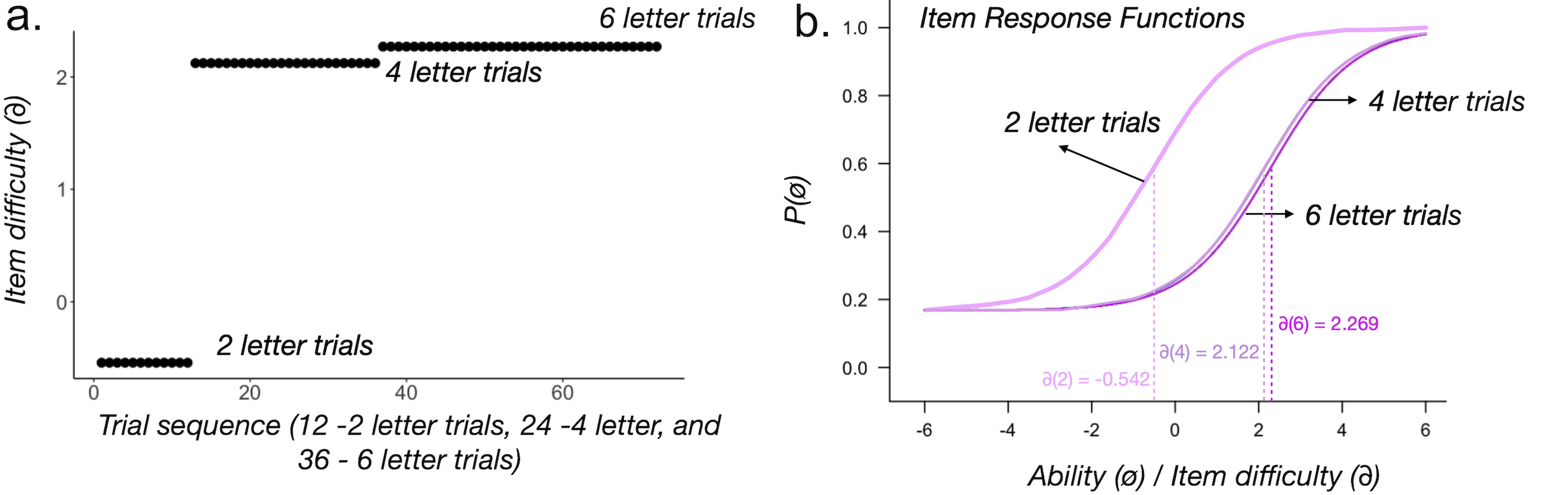

Data from Study 3 (N = 175) were used to calibrate an Item Response Theory (IRT) model. Trials were blocked by string length (twelve 2-letter trials, twenty-four 4-letter trials, and thirty-six 6-letter trials). The goal of IRT is to place item difficulty (blocks of different string lengths) on an interval scale. The Rasch model (1-parameter logistic with a guess rate fixed at 0.167) was fit to the response data for the 3 item types (constraining difficulty to be equal for repeated trials with the same string length) for all 175 participants using the mirt package in R (Philip Chalmers 2012). Figure 20.4 shows item difficulty for each block (a) and item response functions for all three blocks of different string lengths (b).
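To make the model concrete, the item response function of a Rasch model with a fixed guess rate can be written as P(correct) = g + (1 - g) / (1 + exp(-(theta - b))), where theta is the participant's ability, b is the item difficulty, and g = 0.167. The sketch below evaluates that function in Python. It uses the difficulty parameterization, which may differ from the intercept form a fitting package reports, and the difficulty values are placeholders rather than the fitted estimates shown in Figure 20.4.

```python
import math

GUESS_RATE = 0.167  # fixed guess rate reported in the text


def p_correct(theta, difficulty, guess=GUESS_RATE):
    """Probability of a correct response under a Rasch model with guessing."""
    return guess + (1 - guess) / (1 + math.exp(-(theta - difficulty)))


# Placeholder difficulties for the three blocks (NOT the fitted values)
block_difficulty = {"2-letter": -1.0, "4-letter": 0.5, "6-letter": 1.5}

# Item response function for each block at a few ability levels
for block, b in block_difficulty.items():
    curve = [round(p_correct(theta, b), 3) for theta in (-2.0, 0.0, 2.0)]
    print(block, curve)
```

Because the guess rate sets the lower asymptote, predicted accuracy never falls below 0.167 regardless of ability, which is why chance-level performers carry little information about item difficulty.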

20.2.2.5 Final Optimized version

As a first step towards reducing redundancy, we shortened the task by eliminating the thirty-six 6-element trials completely and using only 2- and 4-element trials with an encoding time of 240 ms. Further, for efficient task administration we built a simple transition rule and a termination rule. At the end of the 2-element block, if children get 4 or more trials correct (>= 4/24), they transition to the next item difficulty and complete eight 4-letter trials. If children perform at or below chance, the task terminates after the twenty-four 2-letter trials.
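The transition and termination rules can be expressed as a small decision function. This is an illustrative sketch only: the function and constant names are hypothetical, and it takes the stated threshold of 4 correct out of 24 literally, treating any lower count as the termination case.

```python
N_TWO_ELEMENT_TRIALS = 24   # trials in the 2-element block, per the text
N_FOUR_ELEMENT_TRIALS = 8   # trials in the 4-element block, per the text
TRANSITION_THRESHOLD = 4    # minimum correct 2-element trials to continue


def trials_remaining(n_correct_two_element):
    """Number of 4-element trials to administer after the 2-element block.

    Returns 0 when the task should terminate.
    """
    if not 0 <= n_correct_two_element <= N_TWO_ELEMENT_TRIALS:
        raise ValueError("correct count must be between 0 and 24")
    if n_correct_two_element >= TRANSITION_THRESHOLD:
        return N_FOUR_ELEMENT_TRIALS
    return 0


# Usage: a child with 10 correct continues; a child with 3 correct stops
for n_correct in (10, 3):
    remaining = trials_remaining(n_correct)
    outcome = f"continue with {remaining} trials" if remaining else "terminate"
    print(f"{n_correct}/24 correct: {outcome}")
```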