ROAR-Sentence is a timed measure: the score is the number of correct trials minus the number of incorrect trials within the allotted time window. Originally, ROAR-Sentence was 3 minutes long, but Yeatman et al. (2024) demonstrated that cutting the time in half, to 90 seconds, had very little impact on the reliability and validity of the measure. ROAR-Sentence consists of a collection of equated test forms in which sentences are presented in a fixed order. We first report our methodology for equating test forms (Section 17.1) and then report alternate form reliability (Section 17.3).
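The scoring rule above can be illustrated with a minimal sketch. The function name and the input format (one boolean per trial attempted within the time window) are assumptions made for illustration, not part of the ROAR codebase.

```python
def sentence_score(responses):
    """Return (number correct) - (number incorrect) for attempted trials.

    `responses` is a hypothetical list of booleans, one per trial the
    participant answered within the time window (True = correct).
    Trials that were never reached are simply absent from the list.
    """
    n_correct = sum(1 for r in responses if r)
    n_incorrect = len(responses) - n_correct
    return n_correct - n_incorrect


# Example: 40 correct and 3 incorrect responses in the time window.
example = [True] * 40 + [False] * 3
print(sentence_score(example))  # 40 - 3 = 37
```

One consequence of this rule is that a participant who answers at 50% accuracy scores near zero no matter how many trials they attempt, so responding quickly without reading does not raise the score.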

Equating ROAR-Sentence test forms

ROAR-Sentence consists of multiple parallel forms co-developed by human researchers and generative AI. Generative AI greatly reduces the time and resources required to create multiple test forms, and Zelikman et al. (2023) have shown that the quality of AI-generated forms is highly comparable to that of forms created by humans. These test forms were equated using equipercentile equating, implemented with the equate package.

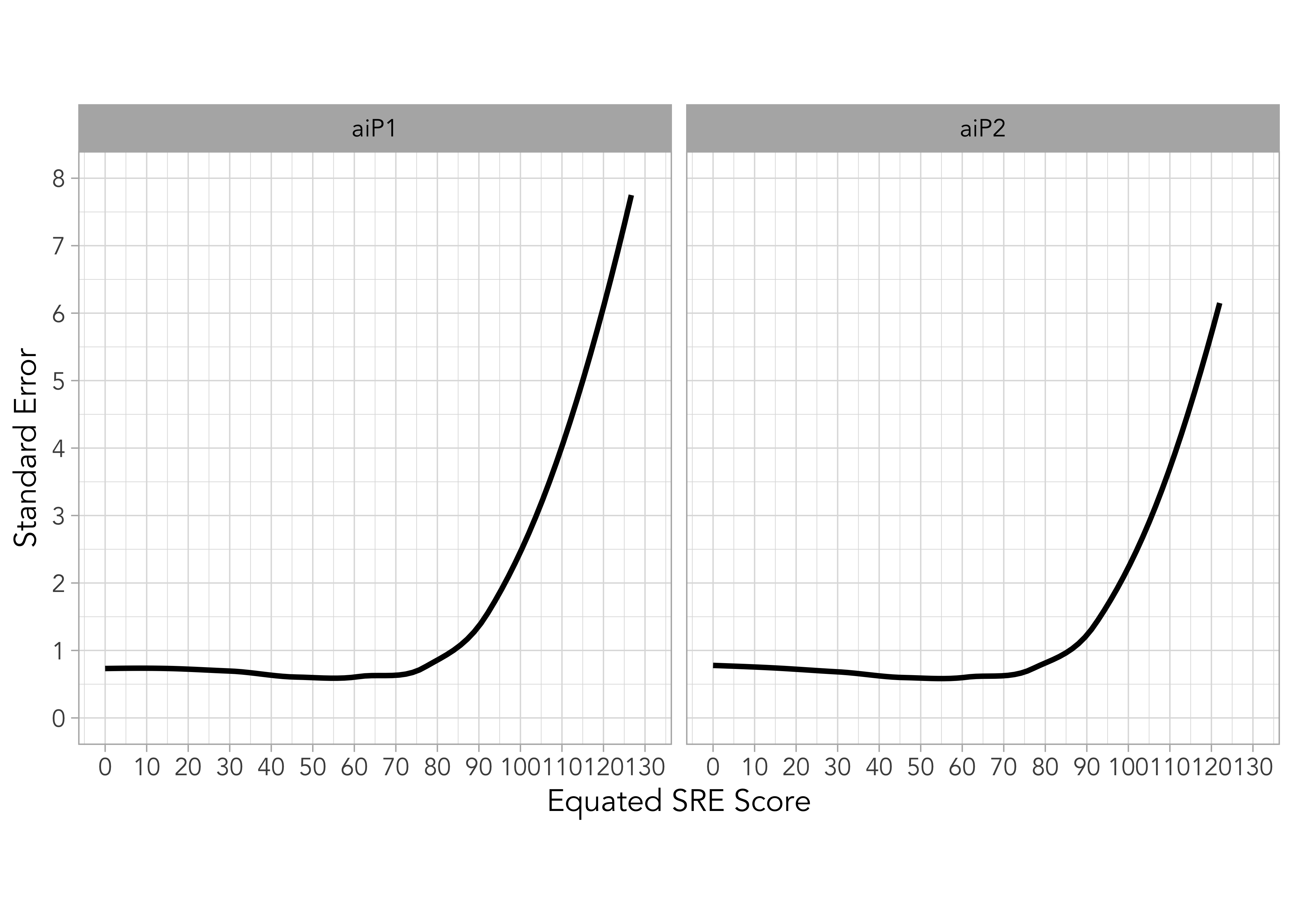

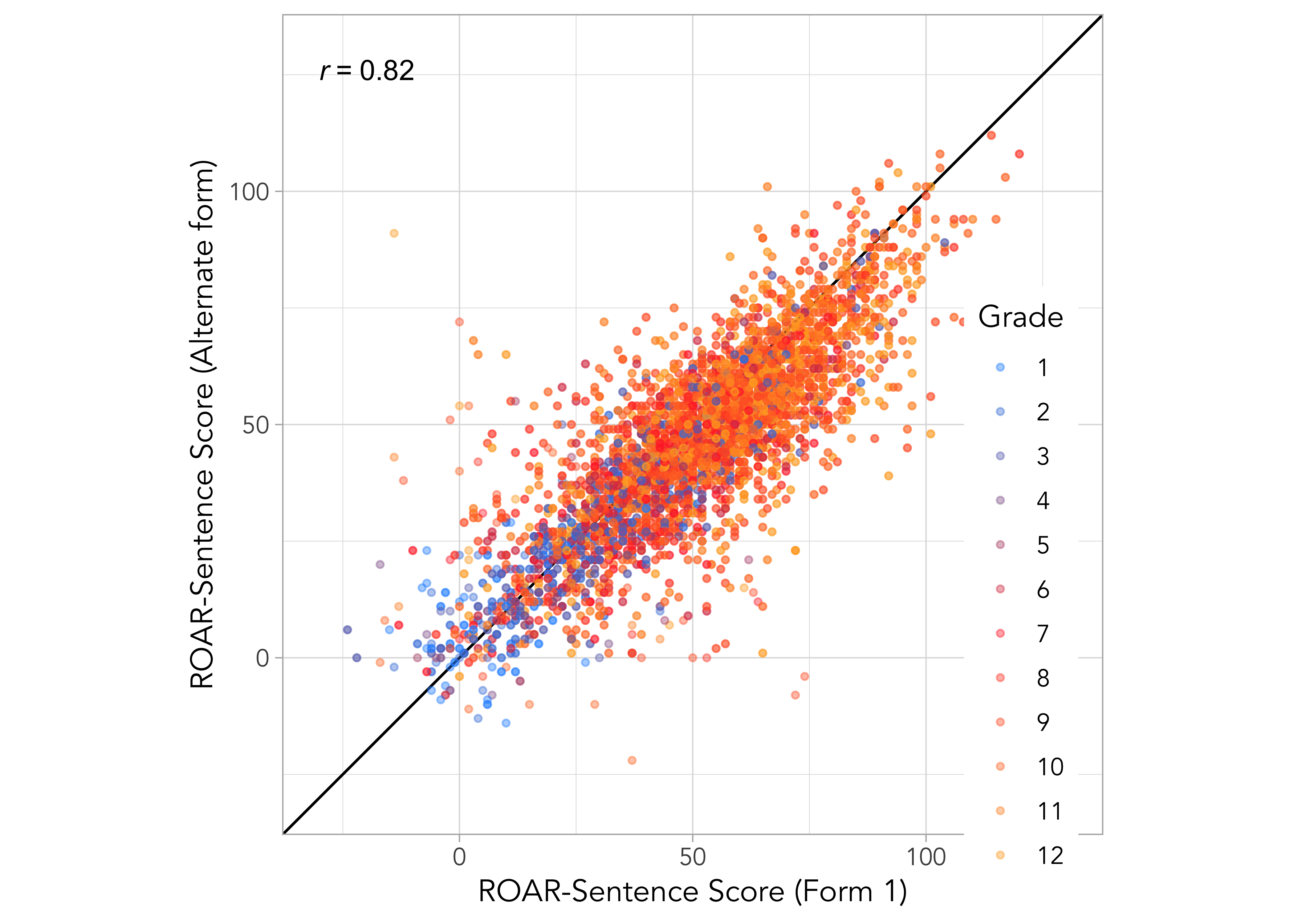

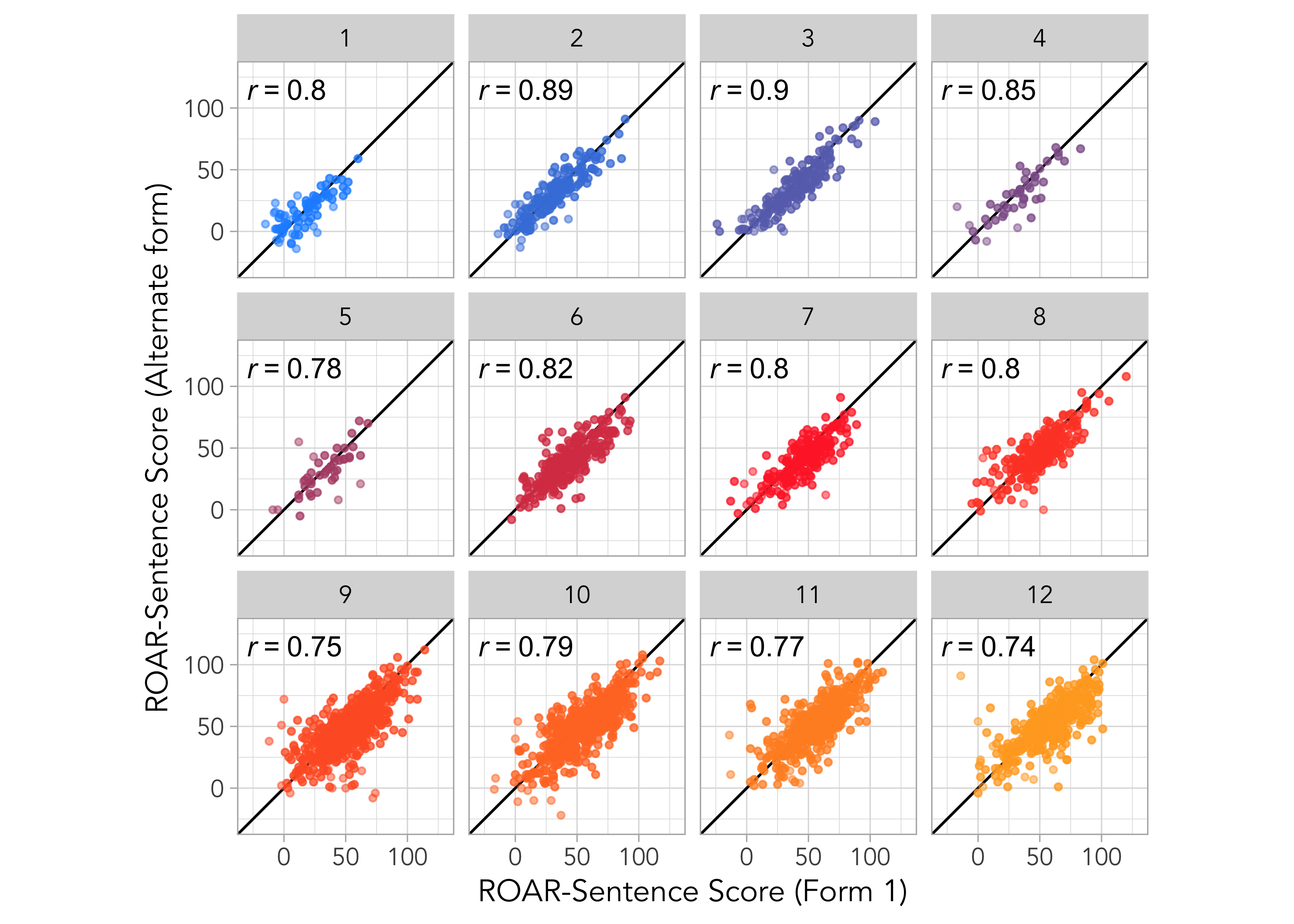

Figure 17.1 a) shows the equated scores across the test forms. Equating enables ROAR-Sentence to randomly select from multiple available test forms, thereby minimizing the potential for practice effects when students encounter the same test form across different testing windows. Figure 17.2 b) provides a separate plot showing the standard error of the equipercentile equating.
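To make the idea behind equipercentile equating concrete, the following is a simplified sketch: a score on one form is mapped to the score on a reference form that has the same percentile rank. This is an illustration under stated assumptions, not the implementation in the equate package. The function names are hypothetical, the inverse is obtained by plain linear interpolation over observed scores, and smoothing and standard-error estimation are omitted.

```python
import numpy as np


def percentile_ranks(scores, values):
    """Percentile rank of each value: 100 * (P(X < x) + 0.5 * P(X == x))."""
    scores = np.asarray(scores, dtype=float)
    below = np.array([(scores < v).mean() for v in values])
    at = np.array([(scores == v).mean() for v in values])
    return 100.0 * (below + 0.5 * at)


def equipercentile_equate(x_scores, y_scores, x_values):
    """Map x_values (form X scale) onto the form Y scale.

    x_scores, y_scores: observed score samples on each form (illustrative).
    x_values: the form X scores to convert.
    """
    x_values = np.asarray(x_values, dtype=float)
    pr_x = percentile_ranks(x_scores, x_values)
    # Percentile ranks at form Y's observed scores are strictly increasing,
    # so the inverse can be approximated by linear interpolation.
    y_grid = np.unique(np.asarray(y_scores, dtype=float))
    pr_y = percentile_ranks(y_scores, y_grid)
    return np.interp(pr_x, pr_y, y_grid)


# Toy data: form B is a hypothetical form on which everyone scores 5 higher.
form_a = np.array([10, 12, 12, 15, 20])
form_b = form_a + 5
# Each form A score maps to the form B score 5 points higher.
print(equipercentile_equate(form_a, form_b, [10, 12, 15, 20]))
```

With real data the two score distributions differ in shape as well as location, which is why the mapping is estimated at each percentile rather than with a single constant shift.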

Criteria for identifying disengaged participants and flagging unreliable scores

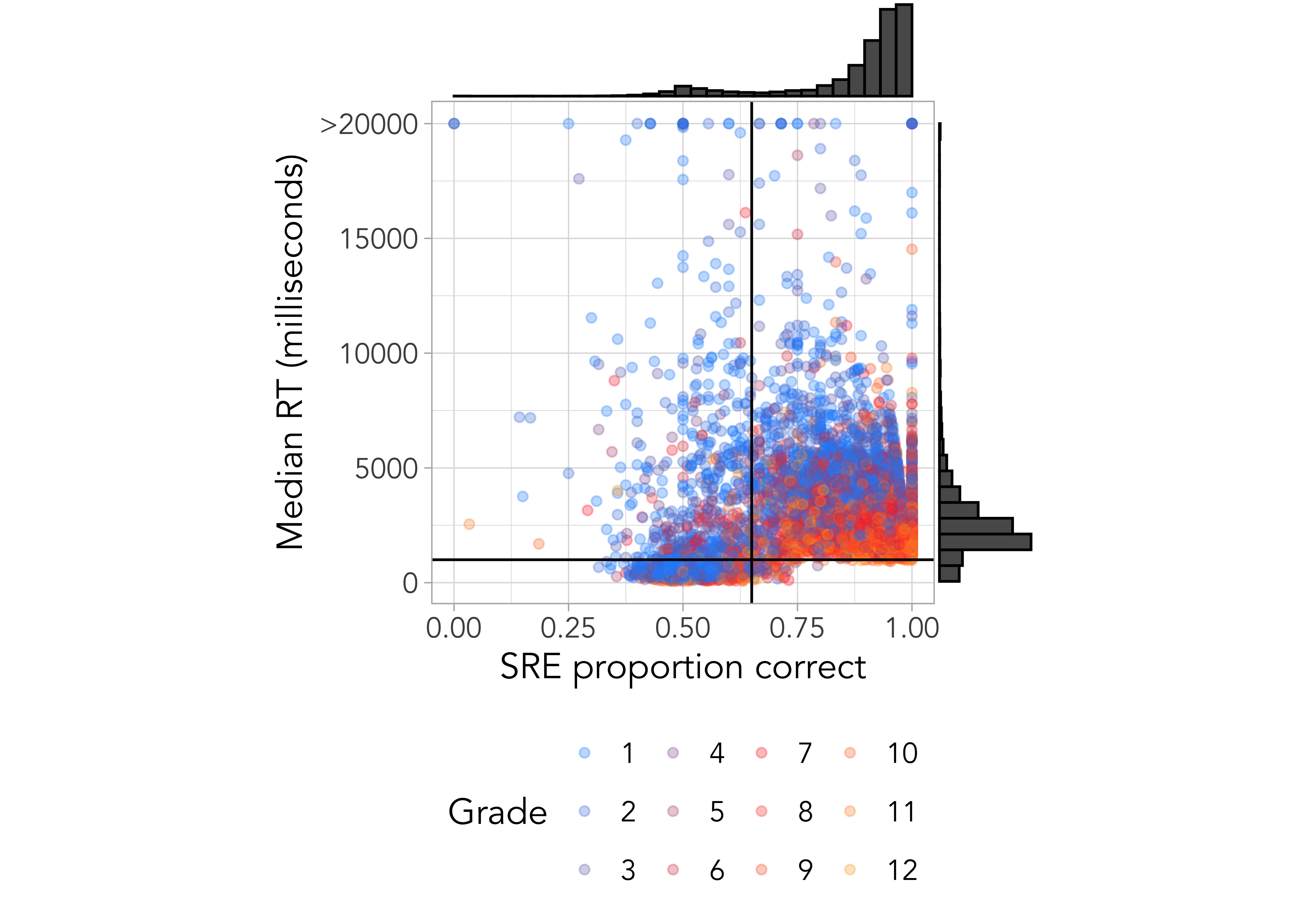

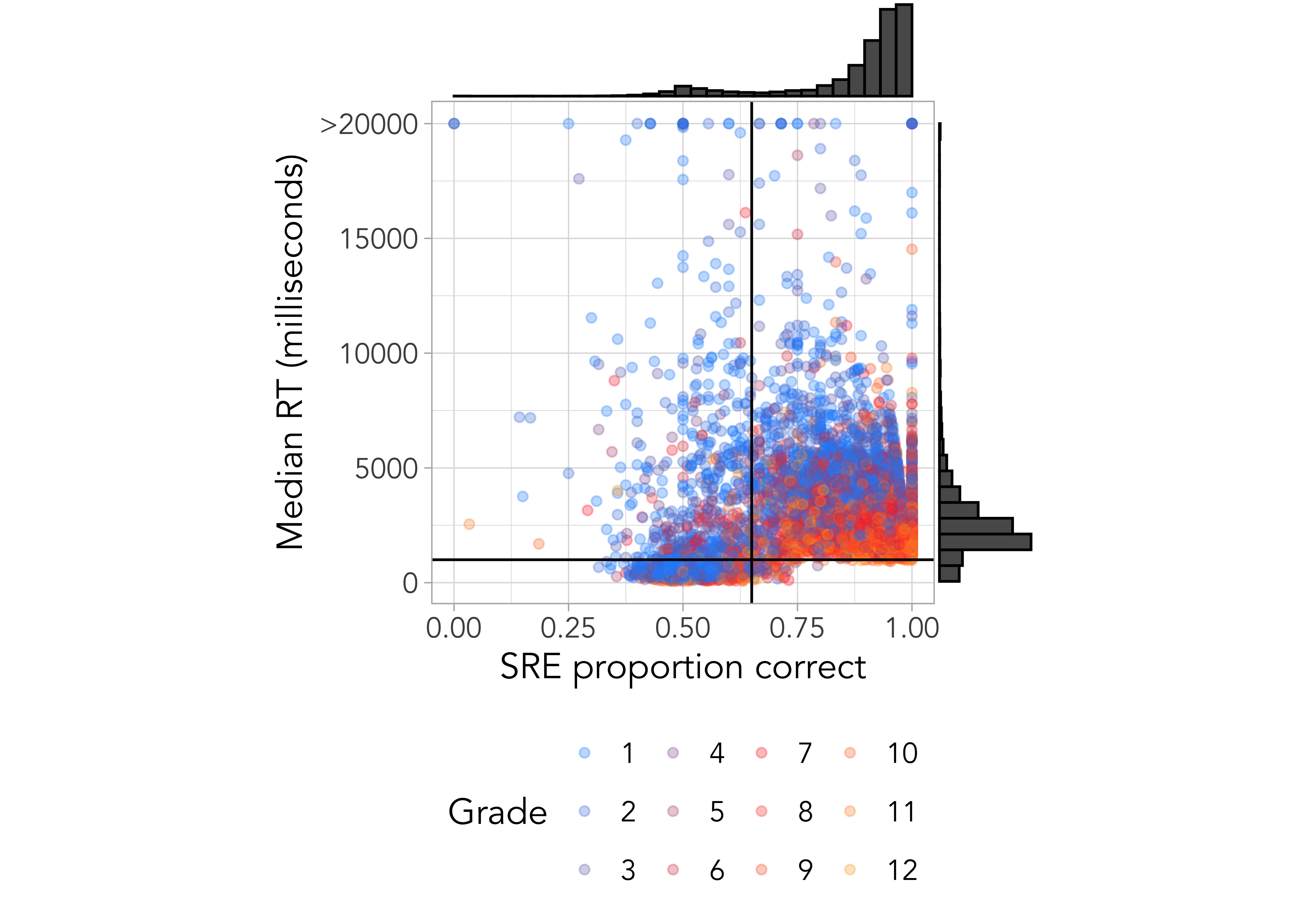

ROAR-Sentence is designed to be fully automated: reading is done silently, responses are non-verbal, instructions and practice trials are narrated by characters, and scoring is done automatically in real time. This makes it possible to efficiently assess a whole district simultaneously. A concern about automated assessments is that, without a teacher to individually administer items and to monitor and score responses, some students might disengage and provide data that are not representative of their true ability. For a measure like ROAR-Sentence, where items are designed and validated to have a clear and unambiguous answer, disengaged participants can be detected based on fast and inaccurate responses. Our approach to identifying and flagging disengaged participants with unreliable scores was published in Yeatman et al. (2024). Figure 17.3 shows a plot of median response time (RT) versus proportion correct for each participant. Most participants were very accurate (>90% correct responses). However, the distribution was bimodal, with a small group of participants performing around chance. These participants also had extremely fast response times.

Participants with both a median response time below 1,000 ms and low accuracy (<65% correct) are flagged as having unreliable scores in ROAR score reports and are excluded from analyses, since their scores do not accurately represent their ability. Teachers can choose whether to re-administer ROAR or to interpret the data cautiously in relation to other data sources and contextual factors.
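The flagging rule can be written as a short check. This is a minimal sketch: the two thresholds come from the text above, while the function name, constant names, and input format (per-trial response times in milliseconds and per-trial correctness) are assumptions for illustration.

```python
from statistics import median

RT_THRESHOLD_MS = 1000     # from the text: median RT below 1,000 ms
ACCURACY_THRESHOLD = 0.65  # from the text: below 65% correct


def is_unreliable(response_times_ms, correct):
    """Flag a participant whose responses are both fast and inaccurate.

    response_times_ms: per-trial response times in milliseconds.
    correct: per-trial booleans (True = correct), same length.
    Both criteria must hold for the score to be flagged.
    """
    if not response_times_ms or len(response_times_ms) != len(correct):
        raise ValueError("need one response time per scored trial")
    median_rt = median(response_times_ms)
    accuracy = sum(correct) / len(correct)
    return median_rt < RT_THRESHOLD_MS and accuracy < ACCURACY_THRESHOLD


# A participant responding in about 600 ms at 50% accuracy is flagged.
print(is_unreliable([600] * 20, [True, False] * 10))  # True
```

Because the two conditions are combined with a logical AND, fast but accurate readers and slow but inaccurate readers are not flagged; only the fast-and-near-chance pattern is.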

References

Yeatman, Jason D, Jasmine E Tran, Amy K Burkhardt, Wanjing A Ma, Jamie L Mitchell, Maya Yablonski, Liesbeth Gijbels, Carrie Townley-Flores, and Adam Richie-Halford. 2024. “Development and Validation of a Rapid and Precise Online Sentence Reading Efficiency Assessment.” Frontiers in Education 9: 1494431. https://doi.org/10.3389/feduc.2024.1494431.

Zelikman, Eric, Wanjing Ma, Jasmine Tran, Diyi Yang, Jason Yeatman, and Nick Haber. 2023. “Generating and Evaluating Tests for K-12 Students with Language Model Simulations: A Case Study on Sentence Reading Efficiency.” In Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing, edited by Houda Bouamor, Juan Pino, and Kalika Bali, 2190–2205. Singapore: Association for Computational Linguistics. https://doi.org/10.18653/v1/2023.emnlp-main.135.